TL;DR 👀

AI coding is moving out of chat windows

ChatGPT is becoming an app platform

AI video generation is now a race, not a novelty

Cloud providers aren’t just hosting AI anymore

AI models are splitting into tiers

YESTERDAY’S IMPOSSIBLE IS TODAY’S NORMAL 🤖

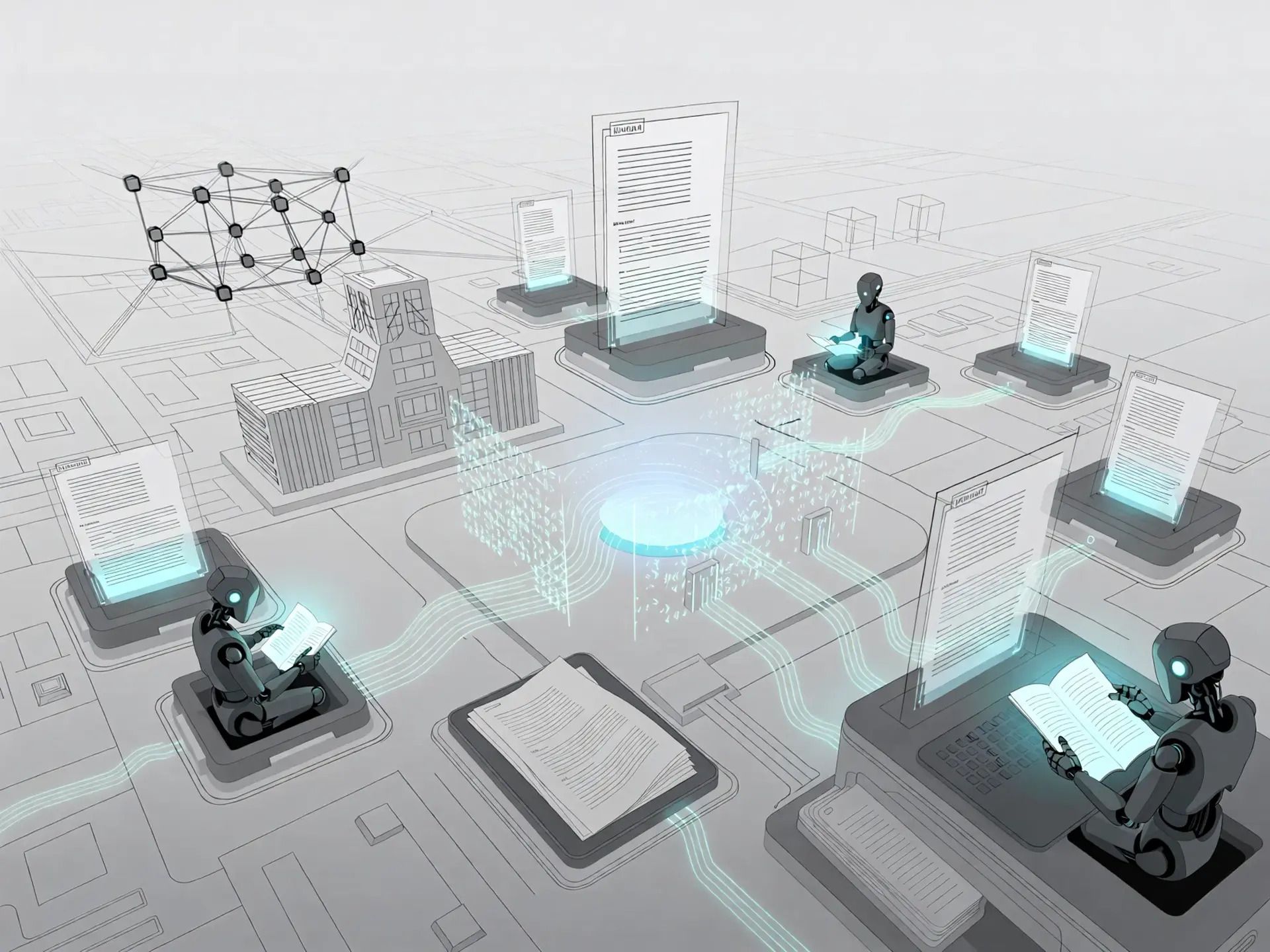

AI coding is moving out of chat windows

Google has quietly released Conductor, a spec-driven development framework built directly into Gemini CLI, and it’s free to use.

Conductor formalizes context engineering by turning intent, requirements, constraints, and architecture decisions into persistent markdown files that live alongside the code. Instead of relying on fragile chat history, AI agents read from a shared, evolving source of truth inside the repository.

The framework is designed for real-world, brownfield projects, where most AI tools struggle. As teams add features or refactor code, Conductor updates project context automatically, giving Gemini consistent awareness of architecture, standards, and goals over time and across contributors.
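For readers who want to picture the idea, here is a minimal sketch of repo-anchored context. It is not Conductor's actual implementation or file layout: the `context/` folder name and the `load_project_context` and `build_prompt` helpers are hypothetical, chosen only to show how markdown files in a repository could be stitched into the context an agent reads before each task.

```python
from pathlib import Path


def load_project_context(repo_root: Path, context_dir: str = "context") -> str:
    """Join every markdown file under repo_root/context_dir into one string.

    The "context" folder name is an assumption made for this sketch.
    """
    base = repo_root / context_dir
    if not base.is_dir():
        return ""  # no context folder yet, so there is nothing to load

    sections = []
    # Sort by path so the assembled context is stable from run to run.
    for md_file in sorted(base.rglob("*.md")):
        rel = md_file.relative_to(repo_root).as_posix()
        body = md_file.read_text(encoding="utf-8").strip()
        sections.append(f"## {rel}\n{body}")
    return "\n\n".join(sections)


def build_prompt(repo_root: Path, task: str) -> str:
    """Prepend the persistent project context to a single task request."""
    context = load_project_context(repo_root)
    return f"{context}\n\n## Task\n{task}"
```

Because the context lives in version-controlled files rather than in a chat transcript, every contributor and every new session starts from the same text, and changes to it show up in code review like any other diff.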

WHY IT MATTERS 🧠

This shifts AI-assisted coding from ad-hoc prompting to structured collaboration.

By anchoring AI behavior to the repo itself, teams get more consistent output, better code quality, and AI that adapts as the project evolves instead of resetting every session.

ChatGPT is becoming an app platform

OpenAI now allows developers to submit third-party apps directly to ChatGPT.

Instead of relying only on OpenAI-built tools, users will soon access a growing ecosystem of community-built integrations inside the chat interface.

WHY IT MATTERS 🧠

This shifts ChatGPT from a single AI product into a true platform.

If successful, it could create an “AI app store” moment where distribution, not just model quality, becomes the real advantage.

AI video generation is now a race, not a novelty

Multiple labs are shipping improvements faster than many realize.

Chinese AI labs are rapidly advancing video-generation models, closing the gap with Western offerings and pushing new capabilities.

Kuaishou’s Kling AI recently launched Video 2.6, its first model capable of generating synchronized audio and visuals in one pass, meaning creators can get natural voices, ambient sound, and dynamic scenes from a single prompt.

At the same time, Kling announced its Video O1 unified model, which combines generation and editing into one workflow and accepts text, image, and video inputs to produce and modify clips.

WHY IT MATTERS 🧠

AI video creation just reached a key inflection point: audio, editing, and generation are being combined into unified models.

This lowers barriers for creators, accelerates workflows, and signals that video generation is becoming mainstream, not experimental.

Cloud providers aren’t just hosting AI anymore

They’re embedding it into how software is built and operated

At AWS re:Invent, Amazon quietly reframed its AI strategy not around bigger models, but around agents, infrastructure, and ownership.

AWS introduced Frontier Agents, a new class of AI agents designed to work alongside engineering teams. These include agents for software development, security, and DevOps, focusing on code generation, vulnerability detection, and incident response, all deeply integrated into the AWS ecosystem.

At the hardware level, Amazon unveiled Trainium 3, its next-generation AI training chip, optimized for cost-efficient large-scale model training inside AWS. Alongside this, AWS announced its AI Factory offering, which lets enterprises deploy AWS AI infrastructure, including GPUs and Trainium chips, directly inside their own data centers.

Rather than competing directly on consumer-facing AI tools, AWS is positioning itself as the default operating layer for enterprise AI.

WHY IT MATTERS 🧠

This signals a shift from “AI as a service” to AI as infrastructure.

By bundling agents, chips, and on-prem deployment, AWS is betting that the biggest AI wins will come from control, integration, and scale, not just smarter models.

AI models are splitting into tiers

Raw intelligence is becoming a premium feature

Google has begun rolling out Gemini 3 DeepThink, its most advanced reasoning model to date, but only for users on the $250/month Ultra plan.

DeepThink powered many of Google’s recent benchmark wins, including strong performance on Humanity’s Last Exam and ARC-AGI-2, but until now it wasn’t accessible to end users. The model is designed for deep reasoning, long-horizon problem solving, and complex planning rather than fast, conversational responses.

At the same time, Google introduced Workspace Studio, an agent builder that lets users create workflow automations across Gmail, Docs, Drive, and Chat using natural language. Instead of prompting the model repeatedly, users define rules, triggers, and actions, effectively embedding AI into daily work processes.

Together, these releases show Google separating reasoning power from workflow automation, and monetizing intelligence at the top end.

WHY IT MATTERS 🧠

We’re entering a phase where not all AI capability is equal or universally available.

Top-tier reasoning models are becoming premium tools, while lighter agents handle everyday automation. This mirrors how cloud computing evolved, with basic services for everyone and advanced capabilities reserved for high-impact use cases.