TL;DR 👀

The AI Shift You Didn’t Notice

Grok 4.2 is on the Horizon

CES 2026: Smart Bricks & Thinking Cars

The Quiet Push Toward Continual Learning

When Autonomous Agents Start Shipping Work

YESTERDAY’S IMPOSSIBLE IS TODAY’S NORMAL 🤖

The AI Shift You Didn’t Notice

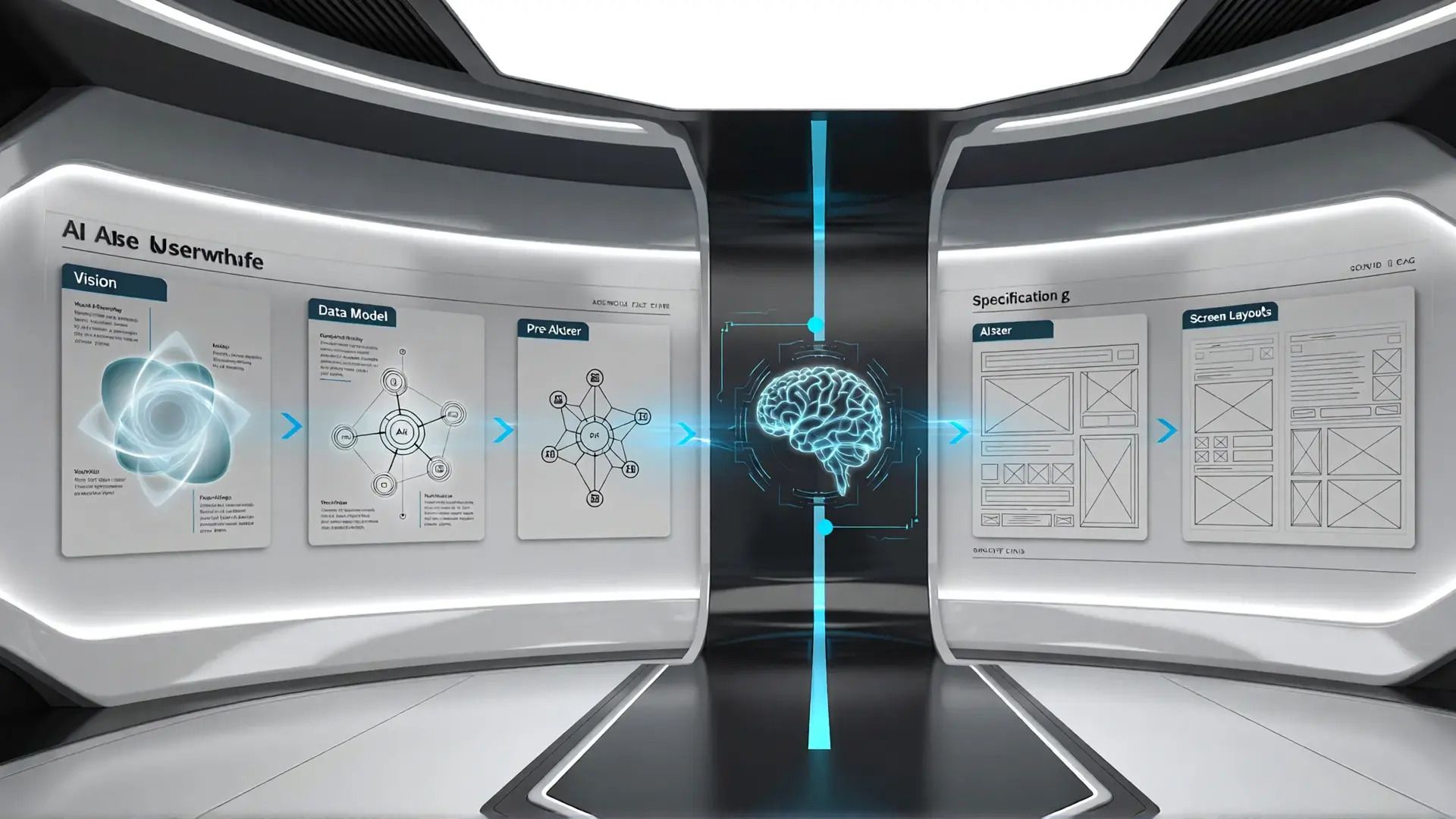

Bridging the gap from design intent to production code, not just generated UI

Most AI coding tools generate frontend UIs quickly, but the results often feel generic and uninspired because design decisions are made inside the code, with no shared specification. The outcome is interfaces that look assembled by AI rather than thoughtfully designed.

Design OS introduces a structured design workflow that sits between product vision and implementation. It guides you through defining product goals, structuring data models, and designing UI before code is written, then exports production-ready components for coding agents to implement.

Instead of asking an AI to “guess” what you want, Design OS builds a shared source of truth for what “done” looks like, giving AI agents clear specifications and reducing guesswork.

This workflow encourages clearer intent, higher-quality UI components, and a design foundation that can be handed off to any coding agent with confidence.

WHY IT MATTERS 🧠

AI isn’t just making tools faster; it’s changing how products are defined and built.

By forcing design thinking before code generation, spec-driven systems like Design OS point to a shift toward intent-driven AI output, where UIs feel authored rather than generated.

This could reshape how teams, technical and non-technical alike, collaborate on frontend development in the age of AI.

Grok 4.2 is on the Horizon

For months, Elon Musk has publicly hinted that Grok 4.2, the next major Grok model, is imminent, with expected strengths in coding, multimodal output, video understanding, and reasoning. Musk even indicated a January 2026 launch window, and prediction markets currently favor a release before January 15.

While the official version hasn’t dropped yet, anonymous stealth models suspected to be Grok variants are circulating on open platforms like Design Arena and LMArena. These include Vortex Shade, Obsidian, and Quantum Crow. Users report fast, high-quality outputs from these models, though results often take multiple prompts, and each model shows its own performance traits.

The Obsidian variant, for example, produces interactive UIs and basic game components, albeit imperfectly, while Quantum Crow may be the heavier, reasoning-oriented variant. Although none of them is confirmed as the final Grok 4.2 release, they appear to be a clear step up from Grok 4.1 in efficiency and speed-to-quality ratio.

However, when compared directly with state-of-the-art models like Gemini 3.0 or Claude Opus 4.5, these models lag slightly in coding finesse, despite their competitive efficiency and potential pricing advantages.

WHY IT MATTERS 🧠

The steady arrival of Grok 4.2-like models ahead of an official launch suggests a shift in how AI capabilities emerge from stealth, and it may accelerate adoption among developers seeking fast, efficient multimodal performance.

If Grok 4.2 lands as expected, it could become the daily-driver model for many users on the strength of its cost-to-capability ratio, especially for coding and creative generation.

CES 2026: Smart Bricks & Thinking Cars

At this year’s Consumer Electronics Show in Las Vegas, tech innovations ranged from playful to profoundly transformative.

LEGO surprised attendees by unveiling Smart Bricks: building pieces embedded with sensors, LEDs, sound, and tiny chips that let them interact physically with other bricks, glow, and respond to how they’re moved. The new platform, called LEGO Smart Play, is designed so that creations react to real-world actions instead of remaining static builds.

On the other end of the innovation spectrum, Nvidia used CES to launch Alpamayo, a family of AI models aimed at autonomous driving. Alpamayo models combine vision, language, and reasoning to help vehicles interpret complex situations the way human drivers do, going beyond simple reactions to sensor input to reason through edge cases. It’s positioned as an open platform for developers building safer, more flexible self-driving systems.

Nvidia says Mercedes-Benz will be among the first partners integrating Alpamayo into road vehicles as early as 2026, highlighting how physical AI is moving from labs to real-world deployment.

WHY IT MATTERS 🧠

CES 2026 isn’t just about clever gadgets; it shows how AI is becoming physical, not just digital.

From interactive play that senses real motion (Smart Bricks) to cars designed to reason through road situations the way human drivers do (Alpamayo), the boundaries between software and everyday objects are blurring. This physical AI trend points to a future where intelligence isn’t confined to screens: it’s embedded in the toys, robots, and vehicles we use every day.

The Quiet Push Toward Continual Learning

AI progress is shifting from static capability gains to systems that adapt over time.

A growing thread in AI research argues that the next major limitation is not reasoning or scale but learning over time without forgetting what a model already knows. Researchers at Google Research and DeepMind have published work on continual learning architectures that separate short-term adaptation from longer-term memory updates. These approaches aim to let models incorporate new information selectively, rather than relying solely on fixed training runs or bolted-on external memory. While still experimental, the work signals a shift away from static models toward systems that evolve after deployment.
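To make the idea of separating short-term adaptation from longer-term memory a little more concrete, here is a toy Python sketch of a two-timescale learner. It is a hypothetical illustration, not the Google Research or DeepMind architecture: the fast/slow split on a single number, the gating threshold, and every parameter name are assumptions chosen for clarity. A fast weight chases each new observation and then decays; only when it grows large enough is part of it consolidated into a slow weight, so a one-off blip fades without touching long-term memory while a persistent signal gets stored.

```python
from dataclasses import dataclass


@dataclass
class TwoTimescaleLearner:
    """Toy scalar learner with a fast (short-term) and a slow (long-term) weight.

    Hypothetical illustration only: parameter names and the consolidation
    rule are assumptions, not a published continual-learning architecture.
    """
    slow: float = 0.0        # long-term memory: changes only on consolidation
    fast: float = 0.0        # short-term adaptation: reacts immediately, then decays
    fast_lr: float = 0.5     # how strongly the fast weight chases each new error
    decay: float = 0.9       # fraction of the fast weight kept after each step
    threshold: float = 0.5   # gate: consolidate only when the fast weight is large
    transfer: float = 0.5    # share of the fast weight moved into slow memory

    def predict(self) -> float:
        # The model's output combines both timescales.
        return self.slow + self.fast

    def observe(self, target: float) -> None:
        error = target - self.predict()
        # Short-term adaptation: move toward the new target, then let it fade.
        self.fast = self.decay * (self.fast + self.fast_lr * error)
        # Selective consolidation: only a large (i.e. repeated) signal reaches slow memory.
        if abs(self.fast) > self.threshold:
            moved = self.transfer * self.fast
            self.slow += moved
            self.fast -= moved


if __name__ == "__main__":
    blip = TwoTimescaleLearner()
    blip.observe(1.0)            # one-off spike: only the fast weight reacts
    for _ in range(10):
        blip.observe(0.0)        # signal disappears; the fast weight decays
    print(blip.slow)             # 0.0: long-term memory untouched

    trend = TwoTimescaleLearner()
    for _ in range(20):
        trend.observe(1.0)       # persistent signal
    print(trend.slow > 0)        # True: part of it was consolidated
```

With these defaults, a single spike never pushes the fast weight past the gate, so `slow` stays at zero, whereas the same signal seen a few times in a row crosses the gate and is partly written into `slow`. Actual research systems operate on full networks with far richer update rules; the sketch only shows the division of labor between the two timescales.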

WHY IT MATTERS 🧠

If continual learning becomes practical, it would change how AI systems are updated, evaluated, and trusted in real-world settings. Instead of periodic retraining cycles, models could adapt incrementally, raising new questions around stability, safety, and governance. This direction also blurs the line between training and inference, which could reshape both benchmarks and deployment strategies.

When Autonomous Agents Start Shipping Work

General-purpose AI agents are moving from demos to sustained execution

Platform updates suggest a shift toward end-to-end digital labor

Manus AI, an autonomous general agent positioned as a “virtual colleague,” has released version 1.6, a major update focused on higher task completion rates and reduced human supervision.

The release expands the agent’s ability to handle multi-step research, data analysis, reporting, and deployment workflows in a single run. New capabilities also include mobile application development and a visual design canvas that allows direct image editing alongside generation. Reports have circulated about a potential acquisition by a major tech company, but those claims have not been independently verified.

WHY IT MATTERS 🧠

What’s notable is not any single feature, but the direction: agents are being optimized for reliability, persistence, and parallel execution rather than raw model intelligence. If these systems consistently complete complex work end to end, they begin to resemble scalable remote labor rather than assistive tools. This shifts pressure onto incumbents to compete on orchestration, autonomy, and trust, not just model quality.